Building RAG Applications with Website Content

A Comprehensive Guide to Web Scraping, Chunking, and Vector Embeddings for RAG Applications

Introduction

Recent advances in Large Language Models (LLMs) have opened up new possibilities for sophisticated natural language applications. Models such as GPT (the family behind ChatGPT), Llama, and Mistral are changing how we interact with AI, from generating human-like text to powering personalized chatbots. However, a major limitation persists: an LLM only knows what was in its training data and cannot update itself with new information. This makes it hard for these models to answer time-sensitive or domain-specific questions.

This is where Retrieval-Augmented Generation (RAG) comes in. RAG retrieves relevant, up-to-date information at query time and adds it to the LLM’s prompt as context, which allows the model to give more relevant and precise answers. Website content is one valuable source of such context.

In this guide, we explain how to extract content from websites and use it to improve LLM responses in a RAG application. We cover everything from the basics of web scraping to chunking strategies and creating vector embeddings for efficient retrieval. Let’s get started!

Web Scraping Fundamentals

To integrate website content into a RAG system, we first need to extract it, a process known as web scraping. Some websites offer APIs for accessing their data, but many do not; in those cases, web scraping is the practical alternative.

Several popular Python libraries can help extract web data. In this guide, we use requests to make HTTP requests and Beautiful Soup to parse the returned HTML. More advanced tools such as Selenium (for pages that render their content with JavaScript) or Scrapy (for large-scale crawling) are also options.

Example: Scraping Wikipedia

Let’s start by scraping a Wikipedia page using BeautifulSoup.

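```python
import requests
from bs4 import BeautifulSoup

# Send a request to the Wikipedia page for Data Science.
# Wikimedia asks clients to identify themselves; the User-Agent below is a placeholder.
response = requests.get(
    url="https://en.wikipedia.org/wiki/Data_science",
    headers={"User-Agent": "rag-tutorial/0.1 (contact: you@example.com)"},
    timeout=10,
)
response.raise_for_status()  # Stop early on 4xx/5xx responses

# Parse the HTML content
soup = BeautifulSoup(response.content, "html.parser")

# Get the textual content inside the main body of the article
content = soup.find(id="bodyContent")
print(content.text)
```

This code sends a request to Wikipedia, fetches the Data Science page, and extracts the text of the article body (the element with id="bodyContent") for further processing.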
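To show what `soup.find(id="bodyContent").text` does conceptually, here is a dependency-free sketch built on Python’s standard `html.parser`. It tracks nesting depth to collect only the text inside the element with a given id. The `TextUnderId` class and `text_under_id` helper are hypothetical names for illustration, and the sketch assumes well-formed HTML with explicit closing tags; Beautiful Soup copes with messy real-world markup (such as omitted `</p>` tags) far better.

```python
from html.parser import HTMLParser

# Void elements never get a closing tag, so they must not change the nesting depth
VOID_TAGS = {"area", "base", "br", "col", "embed", "hr", "img", "input",
             "link", "meta", "source", "track", "wbr"}


class TextUnderId(HTMLParser):
    """Collect the text inside the element whose id attribute equals target_id."""

    def __init__(self, target_id: str) -> None:
        super().__init__()
        self.target_id = target_id
        self.depth = 0  # > 0 while the parser is inside the target element
        self.parts: list[str] = []

    def handle_starttag(self, tag, attrs):
        if tag in VOID_TAGS:
            return
        if self.depth:
            self.depth += 1  # Nested element inside the target
        elif dict(attrs).get("id") == self.target_id:
            self.depth = 1  # Entered the target element

    def handle_endtag(self, tag):
        if tag not in VOID_TAGS and self.depth:
            self.depth -= 1

    def handle_data(self, data):
        if self.depth:
            self.parts.append(data)


def text_under_id(html_text: str, target_id: str) -> str:
    parser = TextUnderId(target_id)
    parser.feed(html_text)
    parser.close()
    # Strip each text fragment and join the non-empty ones with single spaces
    return " ".join(part.strip() for part in parser.parts if part.strip())


page_html = """<html><body>
<div id="siteNotice">Donate today</div>
<div id="bodyContent"><p>Data science is an <b>interdisciplinary</b> field.</p><p>It uses statistics.</p></div>
<div id="footer">Privacy policy</div>
</body></html>"""

print(text_under_id(page_html, "bodyContent"))
```

The site notice and footer are skipped because the parser only records text while it is inside the `bodyContent` element, which is exactly why the Wikipedia example targets that id.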

Chunking: Breaking Down the Content

After successfully scraping some content, the next step is to break it into chunks. Chunking is important for several reasons:

- Granularity: Breaking the text into smaller pieces makes it easier to retrieve the most relevant information.

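- Improved Semantics: A single embedding for an entire document has to summarize every topic in it, so specific details get diluted and become harder to match.

- Efficiency: Embedding models accept a limited input length (all-MiniLM-L6-v2, used below, truncates input longer than 256 word pieces), and smaller chunks also keep the context sent to the LLM short.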

Fixed-Size vs. Context-Aware Chunking

The two most common chunking methods are fixed-size and context-aware chunking. Fixed-size chunking splits text at predefined intervals (for example, every 500 characters) regardless of where sentences end, while context-aware chunking places splits at sentence or paragraph boundaries, so chunk sizes vary.
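A minimal, dependency-free sketch of the two approaches follows, assuming paragraphs are separated by blank lines. The function names `fixed_size_chunks` and `paragraph_chunks` are illustrative, not from any library.

```python
def fixed_size_chunks(text: str, size: int, overlap: int = 0) -> list[str]:
    """Cut text every `size - overlap` characters, ignoring word and sentence boundaries."""
    if size <= 0 or not 0 <= overlap < size:
        raise ValueError("require size > 0 and 0 <= overlap < size")
    if not text:
        return []
    step = size - overlap
    return [text[i:i + size] for i in range(0, max(len(text) - overlap, 1), step)]


def paragraph_chunks(text: str, max_size: int) -> list[str]:
    """Greedily pack whole paragraphs into chunks of at most max_size characters.

    In this sketch, a single paragraph longer than max_size is kept whole.
    """
    chunks, current = [], ""
    for para in (p.strip() for p in text.split("\n\n")):
        if not para:
            continue
        candidate = f"{current}\n\n{para}" if current else para
        if len(candidate) <= max_size or not current:
            current = candidate  # Paragraph still fits (or starts a new chunk)
        else:
            chunks.append(current)  # Close the full chunk, start a new one
            current = para
    if current:
        chunks.append(current)
    return chunks


text = "Data science is a field.\n\nIt uses statistics and code."
print(fixed_size_chunks(text, 20))
print(paragraph_chunks(text, 30))
```

The fixed-size version cuts the word "field" in half in its first chunk, while the paragraph-aware version keeps each paragraph intact at the cost of uneven chunk sizes.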

For this guide, we use the RecursiveCharacterTextSplitter from the LangChain framework. By default it tries to split on paragraph breaks first, then line breaks, then spaces, and only cuts mid-word as a last resort, so most splits fall at logical points in the text.

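```python
# Requires the langchain-text-splitters package (older releases exposed this
# class as langchain.text_splitter.RecursiveCharacterTextSplitter)
from langchain_text_splitters import RecursiveCharacterTextSplitter

text_splitter = RecursiveCharacterTextSplitter(
    chunk_size=512,  # Maximum chunk size, as measured by length_function
    length_function=len,  # Measure length in characters
    # chunk_overlap is left at its default (200 characters shared between neighbours)
)

chunked_text = text_splitter.split_text(content.text)
```

This code splits the scraped text into chunks of at most 512 characters, placing the splits at natural breakpoints where possible.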

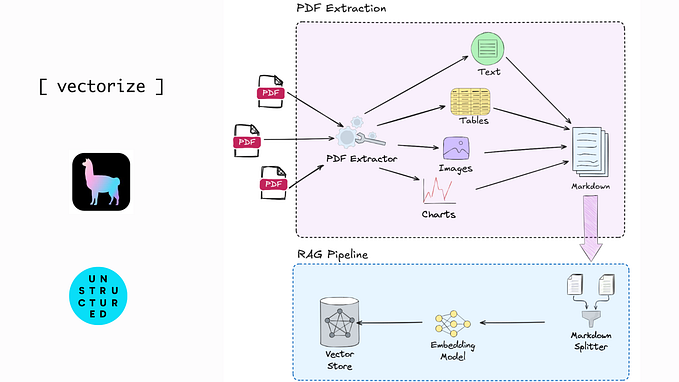
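To see what "natural breakpoints" means in practice, here is a simplified, stdlib-only sketch of recursive splitting. It uses the same separator order as RecursiveCharacterTextSplitter’s defaults (blank line, newline, space, then individual characters), but it is not LangChain’s implementation: it applies no overlap and drops the separators at chunk boundaries. The `recursive_split` function is a hypothetical name for illustration.

```python
from typing import Sequence

DEFAULT_SEPARATORS = ("\n\n", "\n", " ", "")


def recursive_split(text: str, chunk_size: int,
                    separators: Sequence[str] = DEFAULT_SEPARATORS) -> list[str]:
    """Split text into chunks of at most chunk_size characters, preferring coarse separators.

    The separator list must end with "" (hard character cuts) as the final fallback.
    """
    if chunk_size <= 0:
        raise ValueError("chunk_size must be positive")
    if len(text) <= chunk_size:
        return [text] if text else []
    sep, *finer = separators
    if sep == "":
        # Last resort: hard cut every chunk_size characters
        return [text[i:i + chunk_size] for i in range(0, len(text), chunk_size)]
    chunks: list[str] = []
    current = ""
    for piece in (p for p in text.split(sep) if p):
        if len(piece) > chunk_size:
            # Piece is too big on its own: flush, then split it with finer separators
            if current:
                chunks.append(current)
                current = ""
            chunks.extend(recursive_split(piece, chunk_size, finer))
            continue
        candidate = f"{current}{sep}{piece}" if current else piece
        if len(candidate) <= chunk_size:
            current = candidate  # Keep merging pieces while they fit
        else:
            chunks.append(current)
            current = piece
    if current:
        chunks.append(current)
    return chunks


text = "Data science is a field.\n\nIt uses statistics and code.\n\nSee also"
print(recursive_split(text, 40))
print(recursive_split("one two three four", 9))
```

With a 40-character limit, the last two paragraphs are merged because they fit together; the second call has no paragraph or line breaks, so it falls back to splitting on spaces.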

From Chunks to Vector Embeddings

Once we have the text chunks, the next step is to convert them into vector embeddings. Embeddings are numerical representations of the text that capture its semantic meaning, allowing for efficient similarity comparisons.
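A common way to compare dense embeddings is cosine similarity: vectors pointing in similar directions represent similar meanings. The sketch below computes it in plain Python. The 3-dimensional vectors are made up for illustration; all-MiniLM-L6-v2 produces 384-dimensional vectors.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two vectors: 1.0 = same direction, 0.0 = orthogonal."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0  # Treat a zero vector as dissimilar to everything
    return dot / (norm_a * norm_b)


# Made-up vectors standing in for real embeddings
query = [0.9, 0.1, 0.0]
chunks = {
    "chunk about data science": [0.8, 0.2, 0.1],
    "chunk about cooking": [0.0, 0.3, 0.9],
}
for name, vec in chunks.items():
    print(f"{name}: {cosine_similarity(query, vec):.3f}")
```

The data-science chunk scores close to 1 and the cooking chunk close to 0, so a retriever would return the former for this query.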

Types of Embeddings

There are two primary types of embeddings:

- Dense Embeddings: Generated by deep learning models like those from OpenAI or Sentence Transformers. They encode semantic similarity well.

- Sparse Embeddings: Generated by classical methods like TF-IDF or BM25, with one dimension per vocabulary term and most entries equal to zero. They are effective for keyword-based similarity.
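A sparse vector only needs to store its non-zero entries. The sketch below builds TF-IDF vectors as `{term: weight}` dictionaries, assuming a deliberately naive tokenizer (lowercase, split on whitespace) for brevity. BM25 refines the same idea with term-frequency saturation and document-length normalization. The `tfidf_vectors` function is an illustrative name, not a library API.

```python
import math
from collections import Counter


def tfidf_vectors(docs: list[str]) -> list[dict[str, float]]:
    """Return one sparse vector per document, stored as {term: weight}.

    weight = (term count / document length) * log(N / document frequency)
    """
    tokenized = [doc.lower().split() for doc in docs]
    n_docs = len(tokenized)
    # Document frequency: in how many documents each term appears
    df = Counter(term for tokens in tokenized for term in set(tokens))
    vectors = []
    for tokens in tokenized:
        counts = Counter(tokens)
        vectors.append({
            term: (count / len(tokens)) * math.log(n_docs / df[term])
            for term, count in counts.items()
            if df[term] < n_docs  # A term in every document gets idf = log(1) = 0
        })
    return vectors


docs = [
    "data science uses statistics",
    "data engineering builds pipelines",
    "statistics is applied math",
]
vectors = tfidf_vectors(docs)
vocabulary = {term for doc in docs for term in doc.lower().split()}
print(f"{len(vocabulary)} dimensions, non-zero entries per document:",
      [len(v) for v in vectors])
print(vectors[0])
```

Each document touches only 4 of the 10 vocabulary dimensions, and the term "science" (one document) outweighs "data" (two documents), which is what makes TF-IDF good at matching distinctive keywords.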

For our RAG application, we’ll use dense embeddings generated by the all-MiniLM-L6-v2 model from Sentence Transformers.

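```python
# Requires the langchain-community and sentence-transformers packages
# (older releases: from langchain.embeddings import SentenceTransformerEmbeddings)
from langchain_community.embeddings import SentenceTransformerEmbeddings

# Load the model for generating embeddings (downloaded on first use)
embeddings = SentenceTransformerEmbeddings(model_name="all-MiniLM-L6-v2")

# Create an embedding for the fourth chunk of text (index 3)
chunk_embedding = embeddings.embed_documents([chunked_text[3]])
```

This code converts one of the chunks into a dense embedding using the all-MiniLM-L6-v2 model; embed_documents returns a list holding one 384-dimensional vector. In practice, you would generate embeddings for all chunks.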

Storing and Retrieving Embeddings with Milvus

Once we’ve generated the embeddings, we need to store them in a vector database for efficient retrieval. Milvus is an open-source vector database built for storing and searching embeddings at scale, and LangChain provides an integration for it, which makes it a good fit for RAG applications.

Here’s how to store your chunk embeddings in Milvus:

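```python
# Requires the langchain-milvus package and a running Milvus server
# (older releases: from langchain.vectorstores.milvus import Milvus)
from langchain_milvus import Milvus

# Embed every chunk and store the vectors in a Milvus collection
vector_db = Milvus.from_texts(
    texts=chunked_text,
    embedding=embeddings,
    collection_name="rag_milvus",
    connection_args={"uri": "http://localhost:19530"},  # Assumes a local Milvus instance
)
```

This code creates a collection named rag_milvus, embeds every chunk with the embeddings model, and stores the vectors together with their text for later retrieval.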

Building the RAG Pipeline

With the chunks embedded and stored, it’s time to build the RAG pipeline. It takes the user’s query, retrieves the chunks whose embeddings are most similar to it, and passes them to the LLM as context for generating the response.
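The four steps that follow wire this up with LangChain. Stripped of the framework, the flow is: retrieve the top-k chunks for the question, join them into a context string, fill the prompt template, and send the prompt to the LLM. This dependency-free sketch makes two loud assumptions: a hypothetical word-overlap `retrieve` function stands in for the Milvus retriever, and the LLM is any callable that takes a prompt string. The template is a shortened copy of the prompt used in Step 3.

```python
from typing import Callable

TEMPLATE = """Use the following pieces of context to answer the question at the end.
If you don't know the answer, just say that you don't know.

{context}

Question: {question}

Helpful Answer:"""


def retrieve(question: str, chunks: list[str], k: int = 2) -> list[str]:
    """Hypothetical retriever: rank chunks by shared words (a real pipeline uses vector similarity)."""
    q_words = set(question.lower().split())
    ranked = sorted(chunks, key=lambda c: len(q_words & set(c.lower().split())), reverse=True)
    return ranked[:k]


def format_docs(docs: list[str]) -> str:
    # Same idea as format_docs in the LangChain chain: a blank line between chunks
    return "\n\n".join(docs)


def answer(question: str, chunks: list[str], llm: Callable[[str], str]) -> str:
    context = format_docs(retrieve(question, chunks))
    prompt = TEMPLATE.format(context=context, question=question)
    return llm(prompt)


chunks = [
    "A data scientist analyzes data to extract insight.",
    "Milvus is a vector database.",
    "Bananas are yellow.",
]
# Stub "LLM" that returns the prompt unchanged, so we can inspect what a model would receive
prompt_seen = answer("What does a data scientist do?", chunks, llm=lambda prompt: prompt)
print(prompt_seen)
```

Swapping the stub for a real chat model and `retrieve` for a vector-store search gives the same shape as the LangChain chain built below.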

Step 1: Set Up the Retriever

We first need to set up a retriever that fetches the chunks most relevant to the user’s query from the vector database.

```python
# Similarity search over the Milvus collection (returns the top 4 chunks by default)
retriever = vector_db.as_retriever()
```

Step 2: Initialize the LLM

Next, we initialize our language model using OpenAI’s GPT-3.5-turbo:

```python
# Requires the langchain-openai package and an OPENAI_API_KEY environment variable
from langchain_openai import ChatOpenAI

llm = ChatOpenAI(model="gpt-3.5-turbo-0125")
```

Step 3: Define a Custom Prompt

We need to create a prompt template that will guide the LLM to generate appropriate answers based on the retrieved content.

```python
from langchain_core.prompts import PromptTemplate

template = """Use the following pieces of context to answer the question at the end.
If you don't know the answer, just say that you don't know, don't try to make up an answer.
Use three sentences maximum and keep the answer as concise as possible.
Always say "thanks for asking!" at the end of the answer.

{context}

Question: {question}

Helpful Answer:"""

# {context} and {question} become the template's input variables
custom_rag_prompt = PromptTemplate.from_template(template)
```

Step 4: Build the RAG Chain

Finally, we’ll create the RAG chain that will retrieve the most relevant chunks, pass them to the LLM, and output the generated response.

```python
from langchain_core.output_parsers import StrOutputParser
from langchain_core.runnables import RunnablePassthrough


def format_docs(docs):
    # Join the retrieved documents' text into one context string
    return "\n\n".join(doc.page_content for doc in docs)


rag_chain = (
    # The question goes to the retriever (formatted as context) and is also passed through unchanged
    {"context": retriever | format_docs, "question": RunnablePassthrough()}
    | custom_rag_prompt
    | llm
    | StrOutputParser()  # Extract the text of the model's reply
)
```

With the RAG chain set up, you can now send a query to the pipeline and receive an answer based on the website content.

```python
# Print the answer as it is generated, token by token
for chunk in rag_chain.stream("What is a Data Scientist?"):
    print(chunk, end="", flush=True)
```

Conclusion


In this guide, we covered how to extract website content and use it to improve LLM responses in a RAG application. We discussed web scraping, text chunking, generating vector embeddings, and storing those embeddings in a vector database like Milvus.

By using this technique, you can build more informed, context-aware AI applications. Whether you’re creating a chatbot or a question-answering system, RAG grounds the generated responses in retrieved source material, which improves their relevance and accuracy.

It’s important to remember that the success of your RAG pipeline relies on the quality of your data and how you organize the chunks, embeddings, and retrieval process. Experiment with different models, chunk sizes, and retrieval methods to refine your system.

Happy coding, and thanks for reading!
