Llama 3.1: Meta’s Advanced Open-Source AI Model

Meta has launched Llama 3.1, a powerful open-source AI model designed to benefit developers, Meta, and the wider community. Mark Zuckerberg’s letter explains that open-source AI can drive innovation and collaboration. Llama 3.1 features an impressive context length of 128,000 tokens, supports eight languages, and includes the Llama 3.1 model, which is the largest and most advanced open-source AI model available. This model offers exceptional flexibility, control, and state-of-the-art capabilities, enabling new applications like creating synthetic data and refining other AI models.

Meta is expanding the Llama ecosystem with additional tools, including a reference system for easier integration and robust security features like Llama Guard 3 and Prompt Guard to promote responsible development. They are also releasing a standard interface, the Llama Stack API, to make it easier for other projects to use Llama models. Over 25 partners, including major tech companies like AWS, NVIDIA, and Google Cloud, support this initiative. People in the US can try Llama 3.1 by asking challenging math or coding questions on WhatsApp and at meta.ai. Meta’s open-source approach aims to push AI capabilities forward, with Llama 3.1 leading the charge as the most capable open-source AI model.

Introducing Llama 3.1

Meta has released Llama 3.1 405B, an open-source AI model that matches top proprietary models in areas like general knowledge, steerability, math, tool use, and multilingual translation. This model aims to drive innovation and enable new applications, including synthetic data generation and model distillation.

Alongside the 405B, upgraded versions of the 8B and 70B models are also released, featuring a longer context length of 128K and enhanced capabilities. These models support advanced uses such as long-form text summarization and coding assistants. Meta has updated its license to allow developers to use outputs from Llama models to improve other models.

Key Features of Llama 3.1

- Llama 3.1: Llama 3.1 405B is the largest open-source AI model available, offering top-tier general knowledge, steerability, math, tool use, and multilingual support. It facilitates new workflows like synthetic data generation and model distillation, advancing the efficiency of developing and training smaller models.

- Enhanced Multilingual Support: The 8B and 70B models in Llama 3.1 support eight languages and feature a 128K context length, enhancing their ability to handle complex tasks such as long-form summarization and multilingual interactions, thereby improving performance and usability.

- Open Ecosystem: Meta’s open AI ecosystem involves over 25 partners, including AWS, NVIDIA, and Google Cloud, providing immediate deployment and development support for Llama 3.1. This collaboration promotes innovation and broadens access to advanced AI technologies.

- Robust Training and Evaluation: Llama 3.1 405B was trained on 15 trillion tokens with 16,000 H100 GPUs and evaluated across 150 benchmarks. It competes with top models like GPT-4 and Claude 3.5 Sonnet, benefiting from advanced training techniques that enhance its performance and stability.

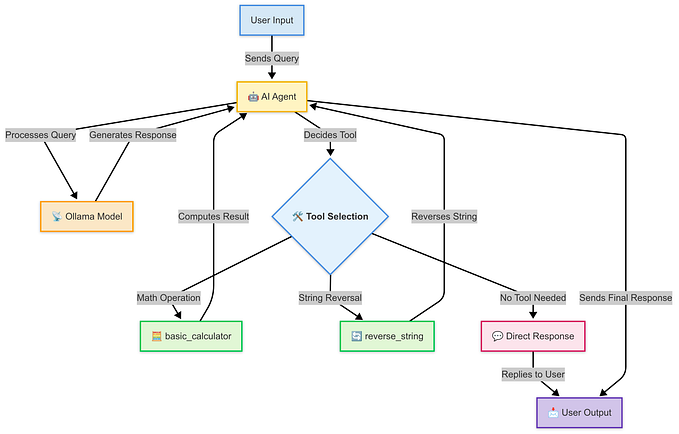

Model Architecture

The image depicts the architecture of a decoder-only transformer model, which is used in Meta’s Llama 3.1. Here’s a step-by-step explanation of the process:

- Input Text Tokens: Raw text is tokenized into smaller units called tokens.

- Token Embeddings: Each text token is converted into a dense vector representation (embedding).

- Self-Attention Mechanism: Each token embedding is processed through a self-attention layer to weigh the importance of each token about others.

- Feedforward Network: The output from the self-attention layer is passed through a feedforward neural network for further non-linear transformation.

- Repeating Layers: The self-attention and feedforward network layers are repeated multiple times to capture more sophisticated features and relationships.

- Output Text Token: The final layer generates an output token based on the learned patterns and context.

- Autoregressive Decoding: The output token is fed back into the model as input for the next step, generating the text output one token at a time until the sequence is complete.

How to Get The Model

You can download the models from these websites:

Try it Yourself

Llama 3.1 is now available in the Groq Playground.

Although the 405B parameter model is not currently available in the playground, you can try it on Groq Chat.

Installation Process

Conclusion

Llama 3.1 represents a significant leap forward in open-source AI, combining state-of-the-art capabilities with an open and collaborative ecosystem. We invite developers to try the Llama 3.1 models today and contribute to shaping the future of AI. Together, we can build more innovative, safe, and accessible AI solutions.